10 Practical Lessons for Business from the DTA Government AI Guidelines

Last week, I received an email from a client saying they've had a "business-wide instruction to pause all AI usage while we roll out an AI Policy for all staff".

Whilst I applaud them for doing this, it’s been nearly 2 years since generative AI became widely used and accessible, and many, if not most, of the businesses we speak to still do not have a policy (or, notably, guidance for staff) setting out their plan for generative AI adoption.

In contrast, earlier this week the government's Digital Transformation Agency (DTA) released a Policy for the responsible use of AI in government. I’ve read the policy, and two aspects in particular make it impactful:

It provides government agencies with a very clear and purposeful framework: Enable, Engage, Evolve.

There is clarity around timeframes and the specific actions government agencies need to take, with a focus on people (e.g. training).

At Time Under Tension, we crafted our own Responsible AI Charter when we set the business up nearly 2 years ago. At the time, we based the charter on Australia’s AI Ethics Principles, which were released back in 2019 and remain (in my humble opinion) undervalued.

Ten things businesses can take from the DTA policy

So, what can businesses learn from the DTA Policy?

Reflecting on the policy, and on the relatively low adoption of responsible generative AI practices in business today, there are several things business can learn from government. Some of these are likely to turn into policy over the coming months and years, as is already happening in the EU, where the EU AI Act has now entered into force.

The ten things below are drawn from, and directly influenced by, the DTA policy.

1. Educate and Train Your Employees

AI Fundamentals: Begin by educating your workforce about AI - what it is, how it works, and the potential impacts on your industry. This foundational knowledge will empower your team to better understand AI’s capabilities and risks.

Ethics and Compliance: Offer training on AI ethics, privacy, and compliance. Ensuring your team is aware of the ethical considerations and legal frameworks is essential as you move towards AI integration.

What we're seeing: Leading organisations are leaning in to understand more about generative AI.

At Time Under Tension, we've conducted over 50 generative AI Inform & Training sessions for leading Australian businesses.

2. Develop a Clear AI Strategy

Assess Business Needs: Identify specific areas within your business where AI could add value, such as automating routine tasks, enhancing customer service, or improving data analysis.

Set Responsible AI Guidelines: Establish clear guidelines for AI use, covering data handling practices, LLM training requirements, bias mitigation, and transparency, so that you adopt AI responsibly and select the right tools for your business.

3. Implement Strong Data Governance

Data Quality and Management: Focus on improving the quality and management of your data. Since generative AI tools thrive on data, well-organised, high-quality data is crucial for successful AI implementation.

Privacy and Security: Strengthen data privacy and security measures. Implement robust encryption, access controls, and data breach response plans to safeguard sensitive information.

4. Start Small with AI Pilots

Pilot Projects: Launch small-scale AI pilot projects with clear objectives and measurable outcomes. This allows you to test the waters and learn how AI can benefit your business without significant risk.

Learn and Iterate: Use insights from these pilots to refine your AI strategy. Address any challenges before scaling up to more complex implementations.

What we're seeing: Enterprise platforms like Microsoft Azure, Amazon Bedrock and Google Vertex AI are making it much easier to deploy generative AI apps securely and rapidly.

We've been building generative AI products for clients in areas like content automation, marketing generation and new conversational product search experiences.

5. Build a Diverse and Skilled Team

Hire or Upskill Talent: Start building a team with AI expertise, either by hiring new talent or upskilling your current employees. A diverse team with skills in data science, machine learning, and ethics will be crucial for responsible AI use.

Foster Collaboration: Encourage collaboration between AI experts, IT, legal, and business teams. This ensures AI initiatives align with broader company goals and comply with legal and ethical standards.

What we're seeing: Some of our progressive clients have established AI steering groups, made up of a diverse representation of roles and levels across the business or departments. These steering groups are tasked with taking charge of the AI roadmaps and ideas.

6. Establish a Risk Management Framework

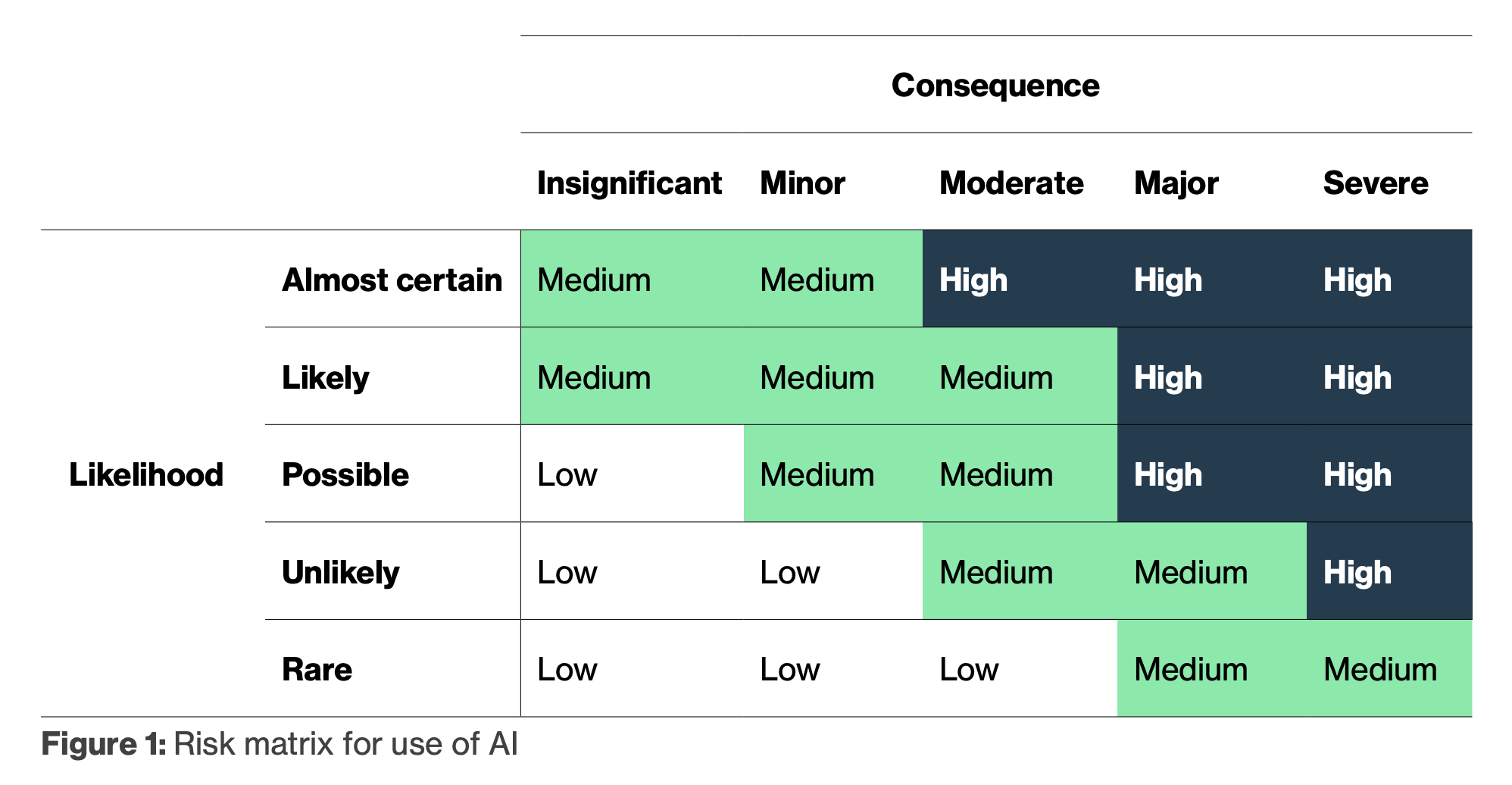

Risk Assessments: Do you know where the risks are in your business from generative AI use? Conduct regular risk assessments to identify potential AI-related risks such as bias, security vulnerabilities, or reputational damage. Established frameworks can guide these assessments.

Mitigation Plans: Develop and implement mitigation plans for identified risks. Include contingency plans for data breaches, AI errors, or public backlash to be prepared for any eventuality.
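For teams that want to make this concrete, the assessment and mitigation steps above can be captured in a simple risk register. The Python sketch below is one possible way to do it, not the DTA's method: the 1 to 5 likelihood and impact scales, the score thresholds, and the example entries are all illustrative assumptions you would replace with your own.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One entry in a hypothetical generative AI risk register."""
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)
    mitigation: str

    @property
    def score(self) -> int:
        # Common likelihood x impact scoring; an assumption, not a DTA rule.
        return self.likelihood * self.impact


def rating(score: int) -> str:
    """Map a score to a band. The thresholds are illustrative only."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


def prioritise(risks: list[Risk]) -> list[Risk]:
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Example entries, invented for illustration.
register = [
    Risk("Biased output in customer-facing content", 3, 4,
         "Human review before publishing"),
    Risk("Sensitive data pasted into a public AI tool", 4, 5,
         "Staff training and an approved-tools list"),
    Risk("Reputational damage from AI errors", 2, 4,
         "Contingency and communications plan"),
]

for risk in prioritise(register):
    print(f"{risk.score:>2}  {rating(risk.score):<6}  {risk.name} -> {risk.mitigation}")
```

Re-running the register after each regular assessment gives a quick view of which risks need a mitigation plan first.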

What we're seeing: Generative AI is ushering in a new wave of risks, from model bias to security and fraud, as has been well documented in Google DeepMind's recent 'Mapping the misuse of generative AI' report.

The DTA Policy sets out an interesting framework for risk assessment with plenty of examples too.

7. Monitor Industry Trends and Regulations

Stay Informed: Keep up with industry trends, AI advancements, and regulatory changes. Staying informed will help you anticipate challenges and adapt your AI strategy as needed.

Engage with Regulators: Build relationships with regulatory bodies and participate in industry forums. This proactive approach ensures you stay ahead of compliance requirements and best practices.

8. Foster a Culture of Innovation and Responsibility

Encourage Innovation: Cultivate an environment that encourages experimentation with new technologies, including AI. Balancing innovation with ethical considerations is key to successful AI adoption.

Promote Transparency: Ensure transparency in your AI initiatives. Make AI decisions explainable and keep stakeholders informed about how AI is being used within your organisation.

9. Plan for Scalability

Scalable Infrastructure: Invest in scalable infrastructure, such as cloud computing and data storage solutions, to support future AI initiatives.

Scalability Strategy: Develop a strategy for scaling AI across your organisation. Apply lessons learned from pilot projects to larger implementations for smoother transitions.

What we're seeing: There are many new and emerging generative AI tools and APIs from the LLM providers, making it easy to trial and experiment before committing to larger transformations.

Part of the support we provide to our clients is helping with 'Build vs Buy' decisions.

10. Build Partnerships with AI Experts

Collaborate with AI Firms: Consider partnering with AI firms or academic institutions to gain access to expertise, resources, and innovative solutions.

Join AI Networks: Engage with AI-focused industry groups or networks to share knowledge, stay informed about best practices, and collaborate on AI-related challenges.

Want to get started?

We work with agencies, companies and brands to elevate Customer & Employee experience with generative AI. Our advisory team help you to understand what is possible, and how it relates to your business. We provide training so you can get the most out of generative AI apps and tools. Our design & technology team build bespoke gen AI tools to meet your needs.

You can reach us here: www.timeundertension.ai/contact