Let’s chat about (building) chatbots!

Issue 5 of our newsletter explores LLM-driven chatbots with some quick tips for how to build one today

Chatbots have long been seen as a holy grail for driving online customer experiences, yet the reality has fallen short. Whether because of their pre-scripted, limited abilities or their rapid handover to a human, these solutions, built on now-legacy technology, have delivered suboptimal user experiences.

However, Large Language Models (LLMs) are heralding a new era of possibilities for chatbots. With multi-modal, multi-lingual, sentiment-aware, dynamic and contextual conversational capabilities, LLM tools look very well placed to solve many of the issues with legacy bots.

Despite the promise of LLMs, these are early days. While brands explore, some are rushing out LLM-powered chatbots at their peril, as these new tools come with a range of new challenges that need to be considered.

HSBC’s Kaya chatbot demonstrating a not unusual traditional chatbot experience

Pre-scripted vs Non-deterministic chatbots

In the old way of building automated chat, the core of the work was pre-defining questions and conversation flows. When a user deviates from these flows (e.g. asking for multi-step actions like “tell me my balance and increase my limit”), the bots quickly reach their limits, defaulting to providing links or offering to pass the user on to a human.

With LLM-powered conversations, the challenge is in keeping the LLM on track. There is no shortage of examples of this going wrong, where brands have rushed out LLM-powered chatbots without the required safeguards.

DPD Chat making a typical LLM blunder here

Knowing how to write, promote and implement safeguards (e.g. managing hallucinations, securing personal data etc.), and how to train an LLM to structure a human-like conversation, is critical to building truly smart chatbots.
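To make the idea of safeguards a little more concrete, here is a minimal sketch in Python of a guardrail wrapper around a model call. Everything in it is an assumption for illustration: the `llm` callable stands in for whichever provider you use, and the regular expressions, blocked terms and fallback wording are hypothetical placeholders rather than a complete safety solution.

```python
import re
from typing import Callable

# Hypothetical patterns for illustration; real PII detection needs far more coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")

# Hypothetical behind-the-scenes prompt that keeps the bot on topic.
SYSTEM_PROMPT = (
    "You are a customer support assistant. Only answer questions about "
    "our products. If you are unsure, say so and offer a human agent."
)
BLOCKED_TERMS = ("useless", "worst")  # hypothetical off-brand words
FALLBACK = "Sorry, I can't help with that. Let me connect you to a human agent."


def redact(text: str) -> str:
    """Mask emails and card-like numbers before the text leaves our system."""
    text = EMAIL.sub("[EMAIL]", text)
    return CARD.sub("[CARD]", text)


def guarded_reply(user_message: str, llm: Callable[[str, str], str]) -> str:
    """Redact the input, call the model, then screen the output."""
    safe_input = redact(user_message)
    try:
        answer = llm(SYSTEM_PROMPT, safe_input)
    except Exception:
        return FALLBACK  # error handling: never surface a raw failure to the user
    if any(term in answer.lower() for term in BLOCKED_TERMS):
        return FALLBACK  # output check: refuse to relay off-brand replies
    return answer
```

In practice you would pass in a function that calls your chosen model, for example `guarded_reply("Where is my order?", my_llm)`, where `my_llm` is your own adapter. Simple keyword checks like these are easy to get around, so treat them as one layer among several.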

Combined with AI agents (see previous post on this), LLM-powered chatbots can be empowered not only to converse, but to undertake and complete actions too. This opens up a whole world of autonomous bot-driven interactions.

Responsible and ethical chatbots

While governments are still working their way through legislation around use of LLMs, a few prevalent themes are emerging for those wanting to practise responsible and ethical AI (which should be everybody playing in this space!) with the chatbots they create.

Transparency and Trust: LLM bots blur the line between human and machine-led conversations. Telling users that they’re talking to a chatbot, and disclosing its nature and limitations, is key to avoiding misleading them. Some social commentators, like Yuval Harari, are calling for tough laws in this space.

Bias and Fairness: Large language models have been trained on data that contains biases. As a result, they will often present a biased view of the world. Training or fine-tuning the models on diverse datasets is one way of minimising the perpetuation of societal biases, as are smart prompting and guardrails that offset the inherent biases in the models.

Privacy and Security: Many early-stage LLM-based chatbots (including the thousands of GPTs in the GPT Store) are being used without disclosing how user conversations and uploaded documents are stored. In the case of OpenAI, while there are ways to “toggle on” privacy, by default everything a user types and uploads may be used to train the model.

Democratisation and Risks

With a fast-moving tide of LLMs emerging for users to chat with, use for work and build on, the barriers to entry for untrained users have been drastically lowered. The importance of responsible usage and building of LLM-powered chatbots has never been greater.

The potential for LLMs to be used for spreading misinformation or manipulating public opinion is real. In what will be seen as the first shot across the bow, OpenAI this week were forced to pull a GPT from the GPT Store and ban the ‘developer’ (no coding involved!) for creating a bot impersonating US presidential candidate Dean Phillips.

LLMs have lowered the bar to building meaningful bots and agents, and this will change the nature of work and our interactions with the world around us. Understanding how to use and build on these emerging technologies will be vital in keeping us safe.

Building an LLM powered bot

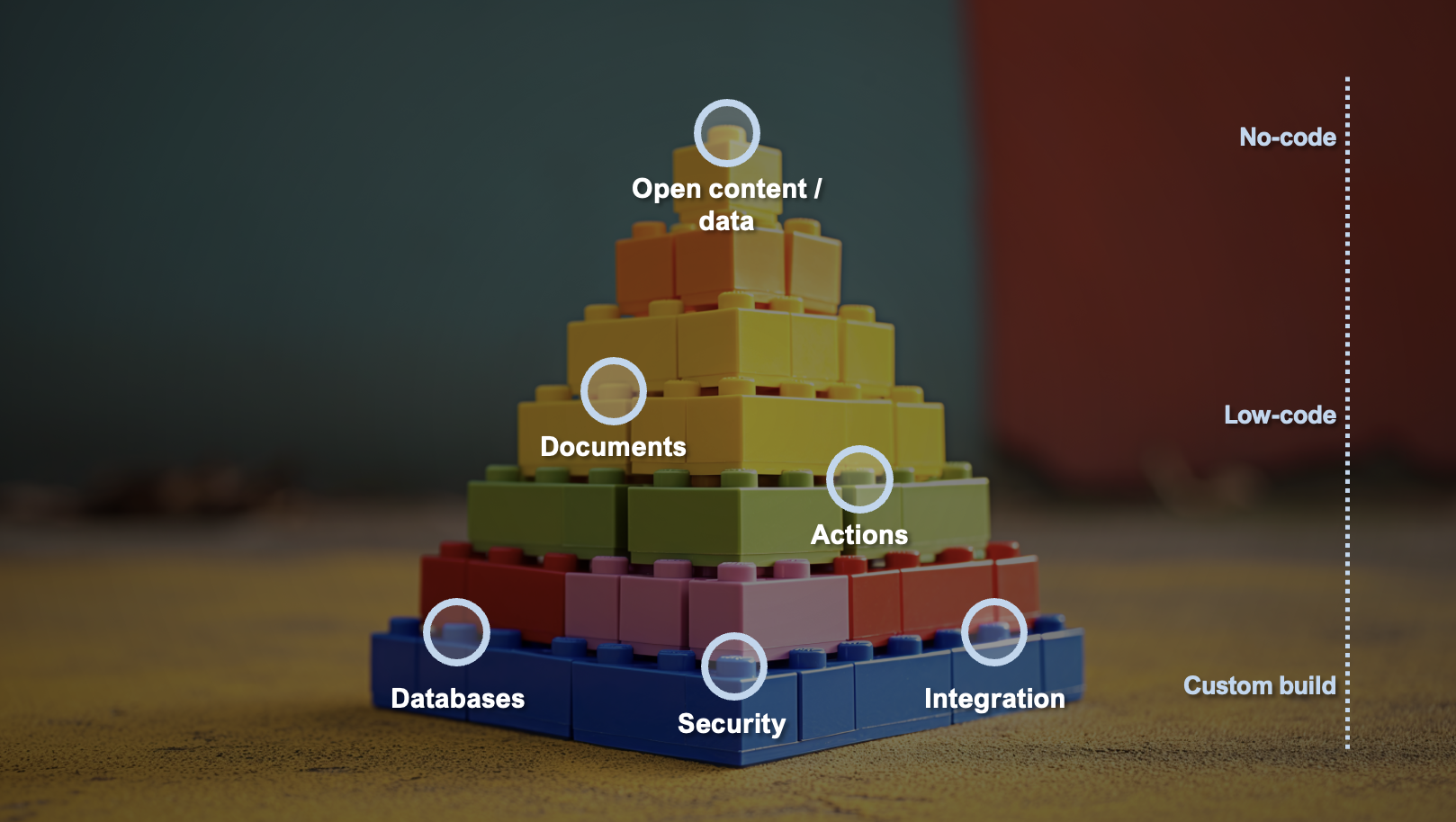

Creating a chatbot has never been easier and there are three approaches people and companies can take: “no-code”, “low-code” and “custom build”.

The route you take depends on how much you need your chatbot to do. The more building blocks (e.g. documents, third party data sources, security considerations etc) your chatbot needs to work with, the more you’ll lean towards a custom build option. But if your chatbot needs limited functionality (e.g. to only be trained on some personal data), then a no-code or low-code option could suit you.

Let’s explore these options.

Build a “no-code” bot, today

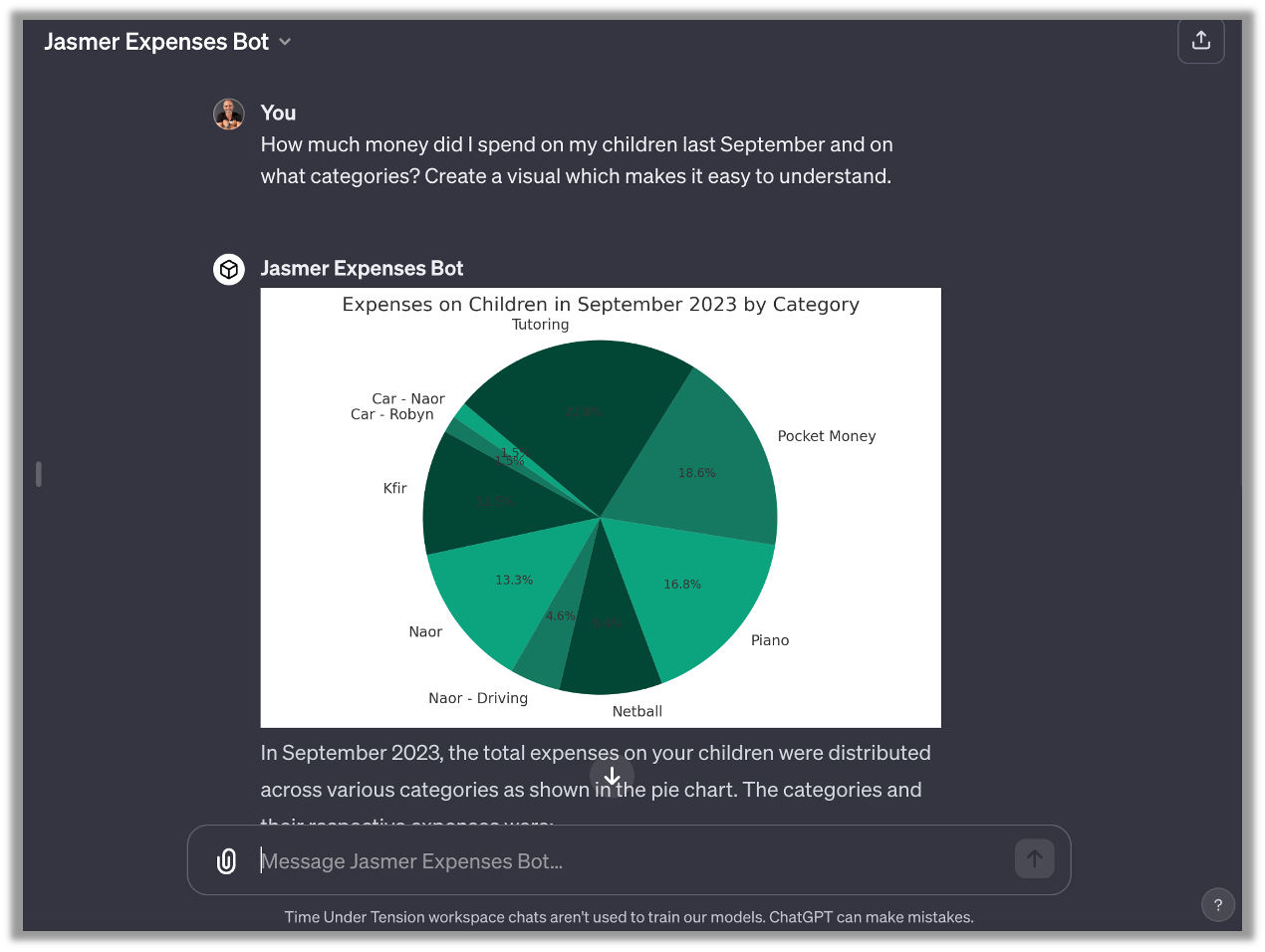

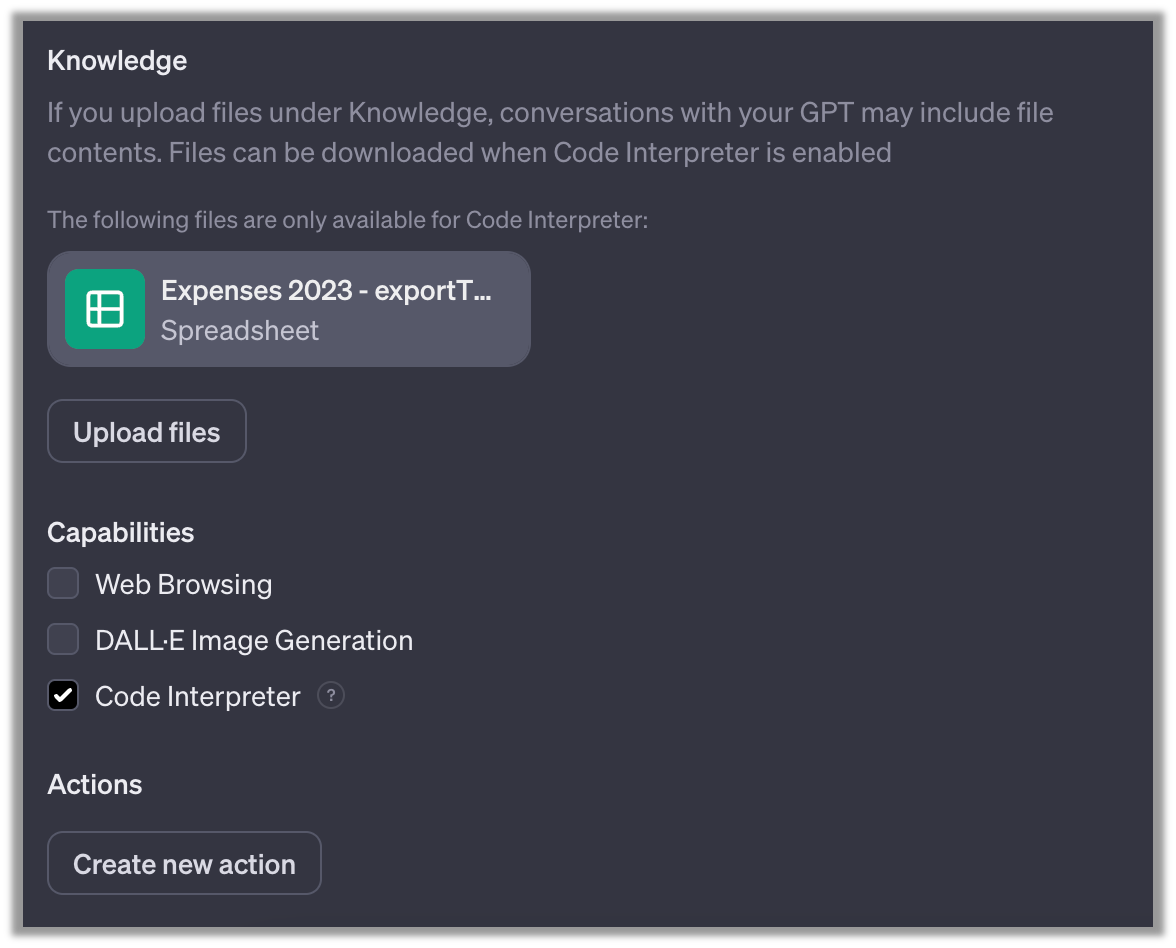

OpenAI GPTs are super powerful: in minutes you can equip a chatbot with your own content (expenses, family recipes, a training course etc.) and have a chat about it.

In the example below, I’ve created a GPT equipped with a year’s worth of my personal finances (stripped and anonymised). I can now ask ChatGPT in plain English about my living expenses.

It’s worth pointing out that OpenAI has also built in the ability to create “actions” within your GPTs. Actions allow you to connect your GPT to an API, to extend its capabilities. In this relatively simple example below I built a GPT which connects to Bland.AI (a telephony service), so my GPT is able to make phone calls on my behalf (more on this here).
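For a feel of what sits behind an action: at the time of writing, the GPT builder asks you to describe the API with an OpenAPI schema, which tells the GPT what it can call and with which parameters. The sketch below builds such a schema in Python and prints it as JSON. The endpoint, field names and server URL are hypothetical and are not Bland.AI’s real API; check the provider’s own documentation for the actual details.

```python
import json

# Hypothetical telephony API, for illustration only; not Bland.AI's real schema.
action_schema = {
    "openapi": "3.1.0",
    "info": {"title": "Example Call API", "version": "1.0.0"},
    "servers": [{"url": "https://api.example.com"}],
    "paths": {
        "/calls": {
            "post": {
                # The operationId is the name the GPT uses to refer to this action.
                "operationId": "placeCall",
                "summary": "Place a phone call and carry out a short task",
                "requestBody": {
                    "required": True,
                    "content": {
                        "application/json": {
                            "schema": {
                                "type": "object",
                                "properties": {
                                    "phone_number": {"type": "string"},
                                    "task": {"type": "string"},
                                },
                                "required": ["phone_number", "task"],
                            }
                        }
                    },
                },
                "responses": {"200": {"description": "Call queued"}},
            }
        }
    },
}

# Print the schema as JSON, ready to paste into an action's schema field.
print(json.dumps(action_schema, indent=2))
```

The clearer the summary and parameter names, the easier it tends to be for the GPT to work out when and how to call the action.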

There are a number of no-code chatbot building tools like ChatGPT GPTs, including Zapier, Botsonic, Dante and Chatbase to name a few (disclaimer: I’ve not tried all of these and there are many more out there!).

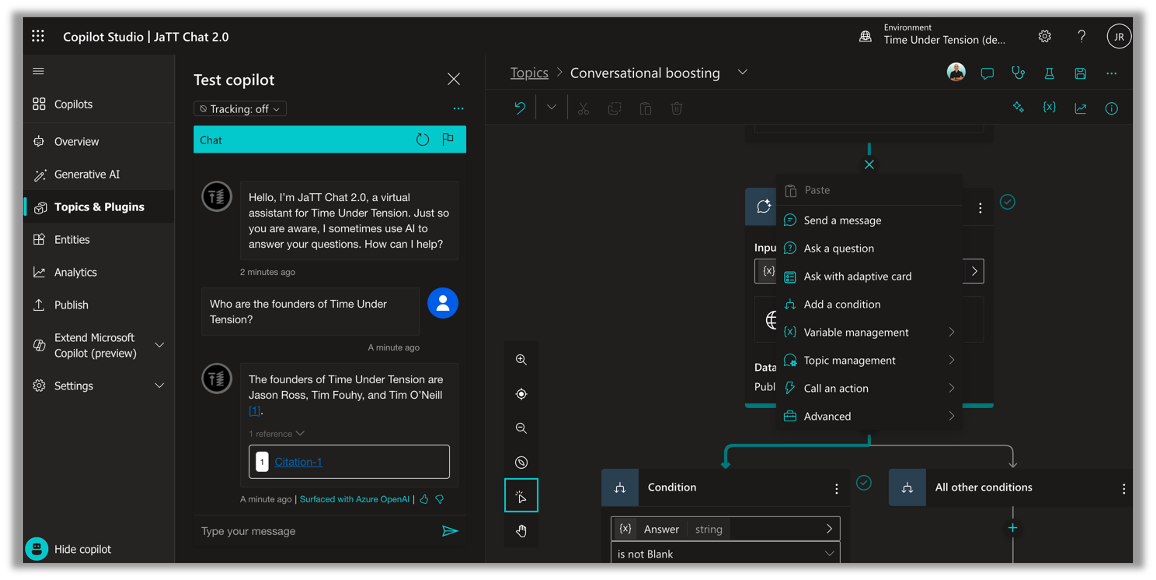

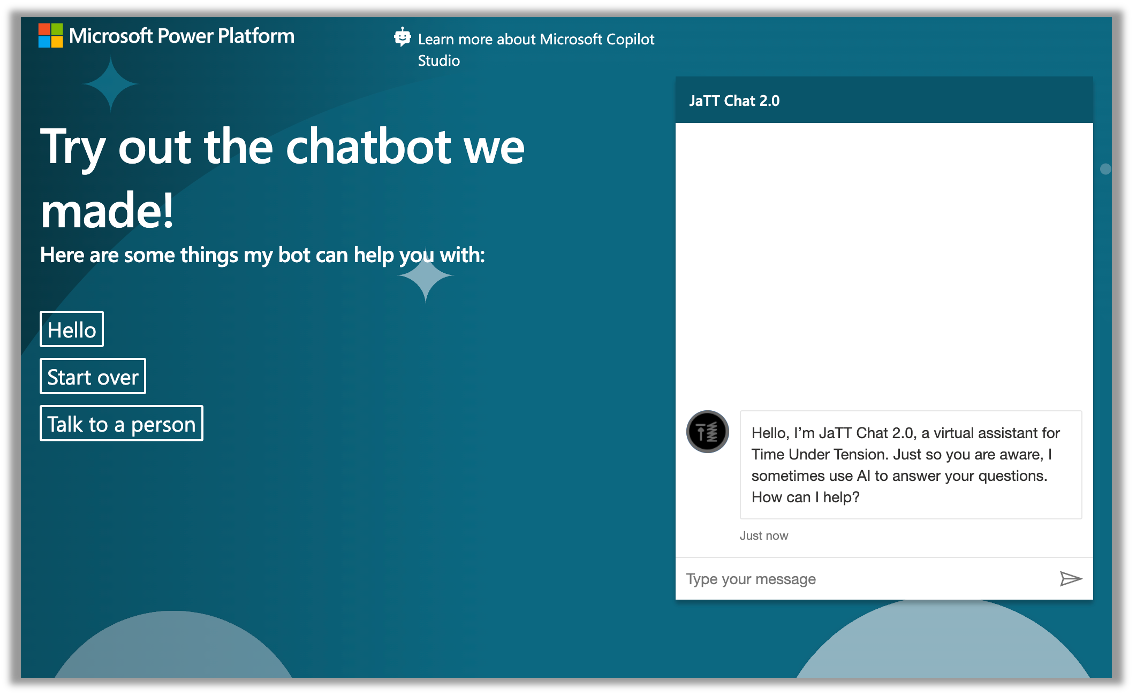

Go further with a “low-code” bot

Since generative AI became mainstream, a raft of “low-code” tools has emerged, including some of those listed above. Low-code tools allow you to create more advanced bots with little coding experience (although it helps) through easy-to-use ‘drag and drop’ style interfaces.

The example below shows the interface of one of these low-code tools, Microsoft Copilot Studio (there are many others). Its simple interface walks you through creating your chatbot and equipping it with knowledge (documents, data sources, websites etc.). The drag and drop interface also lets you extend the chatbot’s capabilities to take actions and integrate with third party data sources.

Custom built bots

For more advanced use cases, or integration into existing systems, a custom built bot might be necessary. This involves developing your own interfaces and LLM integrations. This approach offers maximum flexibility and control, enabling the bot to be tailored to specific business needs or unique user interactions.

For one client we built an RFP automation tool, which reads the content of tender requests and automates the proposal writing process for them. The need for the system to be secure, integrated into their CRM, and work with vast amounts of content meant that a custom built solution was the only way to achieve all the requirements.
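As a rough illustration of what “your own interfaces and LLM integrations” can look like at its very simplest, here is a sketch of a chat session that keeps conversation history and hands it to any model backend. It is not the tool described above. The `llm` callable is a hypothetical adapter you would write around your chosen provider, and the role/content message format is an assumption borrowed from common chat APIs.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Message = Dict[str, str]  # e.g. {"role": "user", "content": "Hello"}


@dataclass
class ChatSession:
    """Holds conversation state and delegates generation to any LLM backend."""

    llm: Callable[[List[Message]], str]  # hypothetical adapter around your provider
    system_prompt: str
    max_turns: int = 10  # how many recent user turns to keep in the prompt
    history: List[Message] = field(default_factory=list)

    def send(self, user_text: str) -> str:
        self.history.append({"role": "user", "content": user_text})
        # Keep the newest user message plus the previous (max_turns - 1) exchanges,
        # so the trimmed history always starts with a user message.
        keep = 2 * self.max_turns - 1
        self.history = self.history[-keep:]
        messages = [{"role": "system", "content": self.system_prompt}] + self.history
        reply = self.llm(messages)
        self.history.append({"role": "assistant", "content": reply})
        return reply
```

Because the backend is just a function, you can swap models, add logging or wrap it in safeguards without touching the conversation logic, which is much of the flexibility a custom build buys you.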

The future is here

LLMs are delivering a tsunami of capability, in many cases capability that requires zero coding knowledge to use, or to misuse and abuse at your peril. Some quick pointers:

Assess whether you need a “no-code”, “low-code” or “custom build” solution, typically dependent on how many Lego blocks you need to consider

Understand the LLMs being used (what were they trained on, what are the potential risks)

Implement guardrails and error handling in the behind-the-scenes prompts

Communicate transparently to users about privacy and the AI-enabled nature of the conversational tool

Start experimenting with the tools available today

The key to harnessing any technological advancement is understanding the technology as a starting point. With the barrier to entry so low, there is no excuse to stay uninformed and every reason to get excited about what’s coming.

We work with agencies, companies and brands to elevate their Customer Experience with generative AI. Our advisory team helps you to understand what is possible, and how it relates to your business. We provide training for you to get the most out of generative AI apps such as ChatGPT and Midjourney. Our technical team builds bespoke tools to meet your needs. You can find us at www.timeundertension.ai/contact

A handful of Gen AI news

Here are five of the most interesting things we have seen and read in the last week:

RunwayML launches motion brush allowing high fidelity of control over image to video creation

Amazon is adding an AI shopping assistant to the browsing experience

Have AI help map your career options using Wanderer

Change clothes with AI using Stable Diffusion

In case you missed our last email update: Exploring the limits; 4 ways to use generative AI for product photography