OpenAI’s Latest Updates: Why Should I Care?

This week OpenAI held its Dev Day conference in San Francisco, the company's first developer event since it publicly released its flagship product, ChatGPT. The livestream for the event can be found here.

With so much happening in the AI space these days, it can be overwhelming at times to keep up. In this article, let’s break down some of the important OpenAI product updates and why you should care about them.

Context Window Increase

GPT-4 is finally receiving its Turbo counterpart, akin to the GPT-3.5-Turbo model that was released back in March. Not only is it 2-3x cheaper than GPT-4, but it also boasts an impressive 128k context window. For reference, 128k tokens is roughly 300 pages of content, or about 96,000 words at the common rule of thumb of 0.75 words per token. That is nearly the entirety of Harry Potter and the Prisoner of Azkaban (107,253 words) in one single message!

When it comes to token limits, we are truly witnessing exponential growth in action. When GPT-4 first made its debut back in March 2023, it had a token limit of 8,192. The GPT-4-32k variant raised this limit to 32,768 tokens. Today, we have a limit of 128,000 tokens, roughly a fourfold jump at each step.
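The arithmetic behind these limits can be sketched in a few lines of Python. This is a rough illustration only: the 0.75 words-per-token ratio is a rule of thumb for English text rather than an exact figure, and the dictionary keys are labels chosen for this example, not necessarily the API's model identifiers.

```python
# Context limits quoted in this article, in tokens.
# The keys are illustrative labels, not necessarily real API model names.
CONTEXT_LIMITS = {
    "gpt-4": 8_192,
    "gpt-4-32k": 32_768,
    "gpt-4-turbo": 128_000,
}

# Assumption: roughly 0.75 English words per token (a rule of thumb, not exact).
WORDS_PER_TOKEN = 0.75


def estimate_tokens(word_count: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Rough token estimate for English text with the given word count."""
    return round(word_count / words_per_token)


def fits_in_context(word_count: int, limit: int) -> bool:
    """True if the estimated token count is within the given limit."""
    return estimate_tokens(word_count) <= limit


def growth_factors(limits: list[int]) -> list[float]:
    """Ratio between each limit and the one before it."""
    return [later / earlier for earlier, later in zip(limits, limits[1:])]


if __name__ == "__main__":
    print(growth_factors(list(CONTEXT_LIMITS.values())))
    # A hypothetical 50,000-word document against the largest and mid-sized limits.
    print(fits_in_context(50_000, CONTEXT_LIMITS["gpt-4-turbo"]))
    print(fits_in_context(50_000, CONTEXT_LIMITS["gpt-4-32k"]))
```

For an exact count you would run the text through a real tokenizer; the estimate above is only meant for quick sizing.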

Coming up with nifty ways to bypass the token limit looks increasingly futile: if this trend continues, today's limit will most likely be at least doubled in a year's time.

More Recent Knowledge Cutoff

For applications that rely heavily on GPT having access to the most up-to-date information, you’re in luck. GPT-4 Turbo has knowledge of world events up to April 2023, up from the previous cutoff of September 2021. Quite a bit has happened in those nearly two years: Covid restrictions eased, Queen Elizabeth II passed away, and Argentina defeated France in the World Cup, to name a few. It’s safe to say that GPT had a ton of catching up to do on world events.

Assistants

There is a lot to unpack here.

At the highest level, OpenAI’s Assistants API is the company inching one step closer to Artificial General Intelligence (AGI). Assistants can be customised to include their own set of instructions, tools, and knowledge bases.

Before Assistants, developers had to get clever about how to feed additional data into GPT’s context window, for example by storing documents in a vector database and retrieving only the relevant pieces.

Today, assistants can retrieve data from files that you give them access to. Behind the scenes, they’re still using vector retrieval methods to filter for relevant data to include as context; however, it’s nice to have an easy-to-use feature like this baked into the assistants.
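To make the idea of vector retrieval concrete, here is a toy sketch. It is not OpenAI's implementation: the bag-of-words "embedding" stands in for a learned embedding model, and the sample chunks are made up for illustration. The principle is the same, though: score each chunk against the query and pass only the best matches into the context window.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. Real systems use learned embedding models."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(
        chunks,
        key=lambda chunk: cosine_similarity(query_vec, embed(chunk)),
        reverse=True,
    )
    return ranked[:top_k]


if __name__ == "__main__":
    # Hypothetical document chunks, invented for this example.
    chunks = [
        "all-mountain skis for intermediate skiers",
        "return policy and shipping times",
        "powder skis for deep snow",
    ]
    print(retrieve("skis for deep powder snow", chunks, top_k=1))
```

A production setup would swap `embed` for an embedding model and the sorted list for a vector index, but the ranking step is conceptually the same.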

Currently, assistants can read CSV, HTML, Word documents, JSON, markdown, plaintext, PDF, and an assortment of code documents in a variety of languages.

Additionally, the model can now request several function calls in a single message. Before, GPT could only request one function call at a time, so a task that needed multiple tools took multiple round trips. Now, GPT understands that achieving one task might require multiple tools, and it can ask for all of them in one response; your code runs the functions and returns the results until the job is done.
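On the application side, handling several function calls in one message might look roughly like the sketch below. The message layout follows the Chat Completions `tool_calls` format as we understand it; the two tools and their return values are hypothetical, and no API request is made here.

```python
import json


# Hypothetical local tools; names and return values are made up for illustration.
def get_price(sku: str) -> dict:
    return {"sku": sku, "price_usd": 499}


def check_stock(sku: str) -> dict:
    return {"sku": sku, "in_stock": True}


TOOLS = {"get_price": get_price, "check_stock": check_stock}


def run_tool_calls(assistant_message: dict) -> list[dict]:
    """Run every tool call in one assistant message and build the reply messages."""
    replies = []
    for call in assistant_message.get("tool_calls") or []:
        func = TOOLS[call["function"]["name"]]
        # Arguments arrive as a JSON-encoded string, not a dict.
        args = json.loads(call["function"]["arguments"])
        result = func(**args)
        replies.append(
            {
                "role": "tool",
                "tool_call_id": call["id"],  # ties the result back to the request
                "content": json.dumps(result),
            }
        )
    return replies


if __name__ == "__main__":
    # A hand-written stand-in for a model response containing two tool calls.
    message = {
        "role": "assistant",
        "content": None,
        "tool_calls": [
            {"id": "call_1", "type": "function",
             "function": {"name": "get_price", "arguments": '{"sku": "SKI-001"}'}},
            {"id": "call_2", "type": "function",
             "function": {"name": "check_stock", "arguments": '{"sku": "SKI-001"}'}},
        ],
    }
    for reply in run_tool_calls(message):
        print(reply)
```

The reply messages would then be appended to the conversation and sent back so the model can compose its final answer.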

As an example, we set up a sales assistant chatbot for Salomon skis (not sponsored). We uploaded a CSV file containing a selection of 10 available skis, and then asked the Assistant to help us shop:

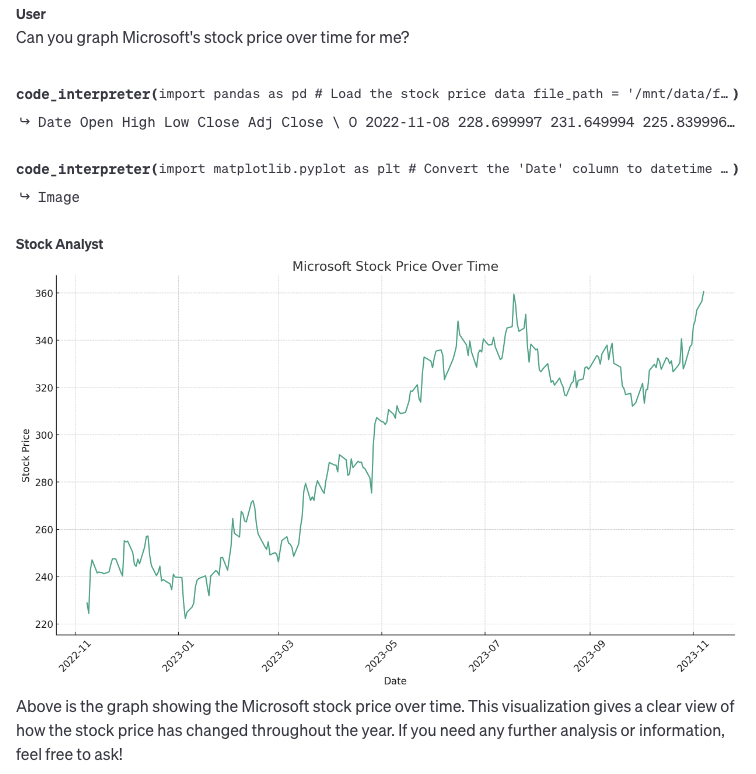

Assistants can also act as a coding sidekick, enabling you to always have a Python programmer at your disposal. As an example, we gave our “Stock Analyst” assistant historical stock data for Microsoft in CSV format. One could create the graph themselves by importing the CSV data into Excel or Google Sheets, but it’s much more fun to ask GPT to do it for you!

Save time by asking GPT to create graphs for you. Not happy with the line colour? Ask it to change it!

API Changes

There are some new features that have been rolled out to OpenAI’s API endpoints. Most notably:

GPT-4 Turbo can accept images as inputs in the Chat Completions API. If you need to be able to generate captions or analyze submitted images in detail, this is definitely something worth checking out.

DALL-E 3 can now be used via the API to generate images on the fly.

Text-to-speech is now available via the API. With six realistic human voice presets to choose from, you can generate human-quality speech for any piece of text.
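As a rough sketch, the request bodies for these three features could be built as below. The field names, the model names (`dall-e-3`, `tts-1`) and the voice names reflect the API as we understand it at launch and may have changed since, so treat them as assumptions and check the current API reference. Nothing is sent over the network here; the functions only build the payloads.

```python
import base64

# The six voice presets as named at launch (assumption: may have changed since).
VOICES = {"alloy", "echo", "fable", "onyx", "nova", "shimmer"}


def image_message(prompt: str, image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """A Chat Completions user message that mixes text with an inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url",
             "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
        ],
    }


def image_generation_request(prompt: str, size: str = "1024x1024") -> dict:
    """Request body for generating one image."""
    return {"model": "dall-e-3", "prompt": prompt, "n": 1, "size": size}


def speech_request(text: str, voice: str = "alloy") -> dict:
    """Request body for text-to-speech using one of the voice presets."""
    if voice not in VOICES:
        raise ValueError(f"unknown voice: {voice!r}")
    return {"model": "tts-1", "input": text, "voice": voice}


if __name__ == "__main__":
    # Placeholder bytes stand in for a real image file.
    print(image_message("Write a caption for this image.", b"abc"))
    print(image_generation_request("A skier in deep powder at sunrise"))
    print(speech_request("Stay tuned!", voice="nova"))
```

Each payload would be sent as JSON to the corresponding endpoint, or passed as keyword arguments to the official client library.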

Conclusion

It’s nice to see that OpenAI is listening to developer feedback and incorporating it into its products. It is truly amazing to think about how far GPT has come in the last year alone. More details about OpenAI’s latest technical releases can be found on the blog: (https://openai.com/blog/new-models-and-developer-products-announced-at-devday). These updates have generally been received positively by the developer community, as they allow for more customization of GPT at a lower price. We are still exploring these new features and will update the blog with interesting use-cases that we develop. Stay tuned!